AI in cyberattacks is no longer science fiction. It is a rapidly growing threat in which artificial intelligence is used to automate, scale, and amplify cybercrime.

In the ever-evolving landscape of cybersecurity, artificial intelligence (AI) has emerged as a transformative force for offense as much as for defense. While AI promises to bolster our digital fortresses, its dual-use nature means it is also rapidly becoming the enterprising adversary’s most potent weapon. Cybercrime has shed its amateur skin, morphing into a highly efficient, business-like operation in which threat actors leverage automation, AI, and sophisticated social engineering to achieve their objectives with unprecedented speed and scale. The average eCrime breakout time, the time an intruder needs to move laterally from the initial foothold, has fallen to just 48 minutes, with the fastest recorded at 51 seconds, a stark reminder of the pace of modern attacks.

The Rise of the AI-Powered Deceiver: Social Engineering Supercharged

One of the most immediate and impactful ways AI is amplifying offensive capabilities is in human-targeted attacks, particularly social engineering. Generative AI (GenAI) has proven to be a significant force multiplier for malicious activity across all major adversary categories: nation-state actors, eCrime groups, and hacktivists. Easy access to commercial Large Language Models (LLMs) has shortened these adversaries’ learning curves and development cycles, allowing them to increase the scale and pace of their operations.

This technological leverage enables the creation of highly convincing fictitious profiles, AI-generated emails, and deceptive websites, which are then used to supercharge insider threats and social engineering attacks. The real-world impact is already substantial: studies report a 135% increase in social engineering attacks and a 260% surge in voice phishing (vishing) following the widespread adoption of GenAI tools like ChatGPT. Vishing alone grew by 442% between the first and second halves of 2024. Malicious AI systems such as FraudGPT and WormGPT, built specifically to craft tailored and highly persuasive phishing messages, are openly traded in underground markets. The result has been a significant increase in identity theft and social manipulation.

Case in Point: The $330 Million Bitcoin Heist

The human element has become the perceived path of least resistance for attackers. As AI-powered deception becomes increasingly convincing, human judgment is challenged, making individuals the new primary target for exploitation. This shift is evidenced by the fact that 68% of all breaches involve a non-malicious human element, such as errors or social engineering.

A chilling example of this trend occurred on April 30, 2025, when a significant social engineering scheme resulted in $330.7 million worth of Bitcoin (BTC) being stolen from an elderly U.S. individual’s wallet. This incident highlights how social engineering tactics like “address poisoning” don’t require complex hacking; instead, they trick victims into sending assets to fraudulent wallet addresses. In 2024, phishing scams alone cost the crypto industry over $1 billion across 296 incidents, making them the most costly attack vector for the year.
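
Address poisoning works because people tend to check only the first and last few characters of a long wallet address. As a rough defensive sketch, a wallet or a script could flag a destination that shares its prefix and suffix with a trusted address but is not identical to it. The function name, the `edge` parameter, and the address strings below are hypothetical and serve only as illustration; this is a heuristic under those assumptions, not how any particular wallet implements the check.

```python
def looks_like_poisoned_address(candidate: str, trusted: list[str], edge: int = 6) -> bool:
    """Flag an address that imitates a trusted one without being identical.

    Heuristic (an assumption of this sketch): the candidate shares its first
    and last `edge` characters with a trusted address but differs elsewhere.
    """
    candidate = candidate.strip()
    known = [addr.strip() for addr in trusted]
    if candidate in known:
        return False  # exact match: this is a genuinely trusted address
    return any(
        candidate[:edge] == addr[:edge] and candidate[-edge:] == addr[-edge:]
        for addr in known
    )


# Placeholder strings for illustration only; they are not real wallet addresses.
TRUSTED = ["bc1qexampleaaaaaaaaaaaaaaaaaa7k9xyz"]
LOOKALIKE = "bc1qexamplebbbbbbbbbbbbbbbbbb7k9xyz"

if __name__ == "__main__":
    print(looks_like_poisoned_address(LOOKALIKE, TRUSTED))   # True
    print(looks_like_poisoned_address(TRUSTED[0], TRUSTED))  # False
```

A check like this only catches lookalikes of addresses the user has already marked as trusted, so it complements, rather than replaces, verifying the full address before sending.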

AI in the Arsenal: Malware, Exploitation, and Reconnaissance

Beyond human manipulation, AI is also enhancing the more technical aspects of cyberattacks:

- Malware Generation: Research demonstrates that LLMs can generate functional Windows PE malware, including ransomware, worms, and DDoS tools, with evasion rates above 70%. Real-world reports of AI-generated malware at scale so far mostly concern simpler attacks such as DDoS, but early work already exists on fine-tuning LLMs to mutate malware so that it evades detection.

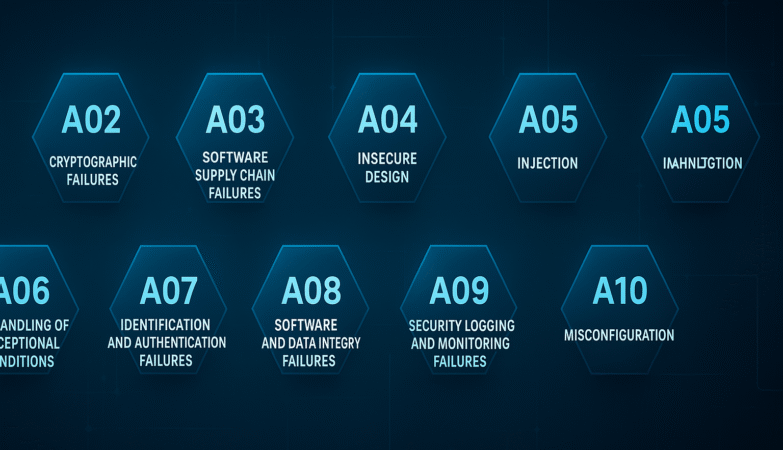

- Vulnerability Identification & Exploitation: LLMs are being leveraged for vulnerability identification across various systems, with real-world attacks already using them for this purpose, such as exploiting MSDT vulnerabilities and SQLite overflow bugs. AI agents have also demonstrated the capability to generate Proof-of-Concepts (PoCs) for memory corruption bugs in C/C++ and can exploit N-day and zero-day vulnerabilities like SQL/XSS injection and remote code execution.

- Reconnaissance: Frontier AI is being explored for active scanning, victim information gathering, and open-source database searches. Real-world attackers increasingly use LLMs for active scanning (e.g., Forest Blizzard) and information gathering (e.g., Salmon Typhoon).

- Initial Access: Attacks related to initial access boomed in 2024: 52% of the vulnerabilities CrowdStrike observed that year were related to initial access. Adversaries are also increasingly using legitimate remote monitoring and management (RMM) tools to gain a foothold in networks, rather than relying solely on malware delivery.

- Cloud-Aware Attacks: CrowdStrike reports a significant rise in cloud-aware cyberattacks and intrusions with AI enhancement, indicating attackers are adapting to modern infrastructure.

The Escalating Financial Toll

The financial consequences of these AI-amplified attacks are staggering. The global average cost of a data breach reached $4.88 million in 2024, marking a 10% increase from the previous year. In the cryptocurrency sector alone, more than $2.1 billion has been stolen in crypto-related attacks so far in 2025, with the majority stemming from wallet compromises and phishing attacks.

The Bybit Exchange Hack: A Record-Breaking Exploit

A prime example of the escalating financial impact is the $1.4 billion Bybit exchange hack on February 21, 2025, attributed to the infamous North Korean Lazarus Group. This incident was described as the largest exploit in crypto history. By itself it equals over 60% of the total value lost in all crypto hacks in 2024, which amounted to $2.3 billion across 760 on-chain security incidents.
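
For readers who want to check the scale of that comparison, the arithmetic can be sketched in a few lines. The three input figures are the ones quoted above; the variable names and the per-incident average are illustrative additions, not numbers taken from the sources.

```python
BYBIT_LOSS_USD = 1.4e9        # Bybit hack, February 21, 2025
TOTAL_2024_LOSS_USD = 2.3e9   # all crypto hacks in 2024
INCIDENTS_2024 = 760          # on-chain security incidents in 2024

# Bybit loss expressed as a fraction of everything lost in 2024.
share_of_2024_total = BYBIT_LOSS_USD / TOTAL_2024_LOSS_USD

# Mean loss per 2024 incident (a simple average, which hides a skewed distribution).
average_2024_incident_usd = TOTAL_2024_LOSS_USD / INCIDENTS_2024

# How many "average 2024 incidents" the single Bybit loss represents.
bybit_vs_average = BYBIT_LOSS_USD / average_2024_incident_usd

print(f"Bybit loss as a share of the 2024 total: {share_of_2024_total:.1%}")
print(f"Average loss per 2024 incident: ${average_2024_incident_usd / 1e6:.2f}M")
print(f"Bybit loss relative to that average: {bybit_vs_average:.0f}x")
```

The single hack comes to about 60.9% of the 2024 total, while the mean 2024 incident was roughly $3.03 million, which shows how heavily one event can dominate a year of losses.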

The Future Outlook: An Attacker’s Market (for now)

The immediate future suggests that frontier AI will likely benefit attackers more than defenders. This is due to the dual-use nature of AI capabilities, where defensive tools can be repurposed for offensive operations, and inherent attacker-defender asymmetries. Attackers only need one successful exploit, while defenders must secure against all potential threats, leading to a lower failure tolerance for defenders. AI’s probabilistic nature introduces errors, further restricting its deployment in critical defense systems, whereas attackers have a higher error tolerance, allowing them to readily exploit emerging technologies.
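
The asymmetry can be made concrete with a toy probability model. Assume, purely for illustration, that each attack attempt succeeds independently with the same small probability; the chance that at least one of n attempts succeeds is then 1 - (1 - p)^n. The function name and the 1% figure below are assumptions of this sketch, not measurements from the cited reports.

```python
def chance_of_any_success(p_single: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent tries succeeds."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # Complement rule: 1 minus the probability that every attempt fails.
    return 1.0 - (1.0 - p_single) ** attempts


if __name__ == "__main__":
    # Illustrative assumption: each attempt has a 1% chance of succeeding.
    for n in (1, 10, 100, 500):
        print(n, round(chance_of_any_success(0.01, n), 3))
```

Under these assumptions, a defense that stops 99% of attempts still faces about a 63% chance of at least one breach after 100 attempts. Automation that makes attempts cheaper therefore pushes the odds toward the attacker even when each individual attempt is unlikely to work.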

Frontier AI is expected to lower attack thresholds, especially for reconnaissance and weaponization, by reasoning with partial information. It can also make attacks more automated and stealthy, enabling automated exploit chain generation. AI’s continuous learning capabilities will allow for automatic evolution and customized attack generation, ensuring that the offensive capabilities continue to adapt and grow.

As adversaries become more organized and effective, running their operations with a business-oriented approach, the cybersecurity community faces an urgent imperative to evolve its defenses. Understanding the depth and breadth of AI’s amplified offensive capabilities is the critical first step in building a more resilient and proactive security posture.

Sources and Related Content

- CrowdStrike. (n.d.). Global Threat Report. Retrieved from https://www.crowdstrike.com/en-us/global-threat-report/

- CrowdStrike. (n.d.). CrowdStrike Global Threat Report 2025. Retrieved from https://go.crowdstrike.com/rs/281-OBQ-266/images/CrowdStrikeGlobalThreatReport2025.pdf?version=0

- arXiv. (2025, April 14). Frontier AI’s Impact on the Cybersecurity Landscape (arXiv:2504.05408v2). Retrieved from https://arxiv.org/pdf/2504.05408

- Cointelegraph. (2025, June 4). $2.1B crypto stolen in 2025: hackers exploit human behaviour: CertiK. Retrieved from https://cointelegraph.com/news/2-1b-crypto-stolen-2025-hackers-human-psychology-certik

- N-able. (2025, April 24). Top 10 Cybersecurity Statistics Every MSP and IT Professional Should Know in 2025. Retrieved from https://www.n-able.com/blog/top-10-cybersecurity-statistics-every-msp-and-it-professional-should-know-in-2025
