On November 18, 2025, Cloudflare’s global network suffered a major outage that affected thousands of websites, including high-profile platforms such as ChatGPT and X.

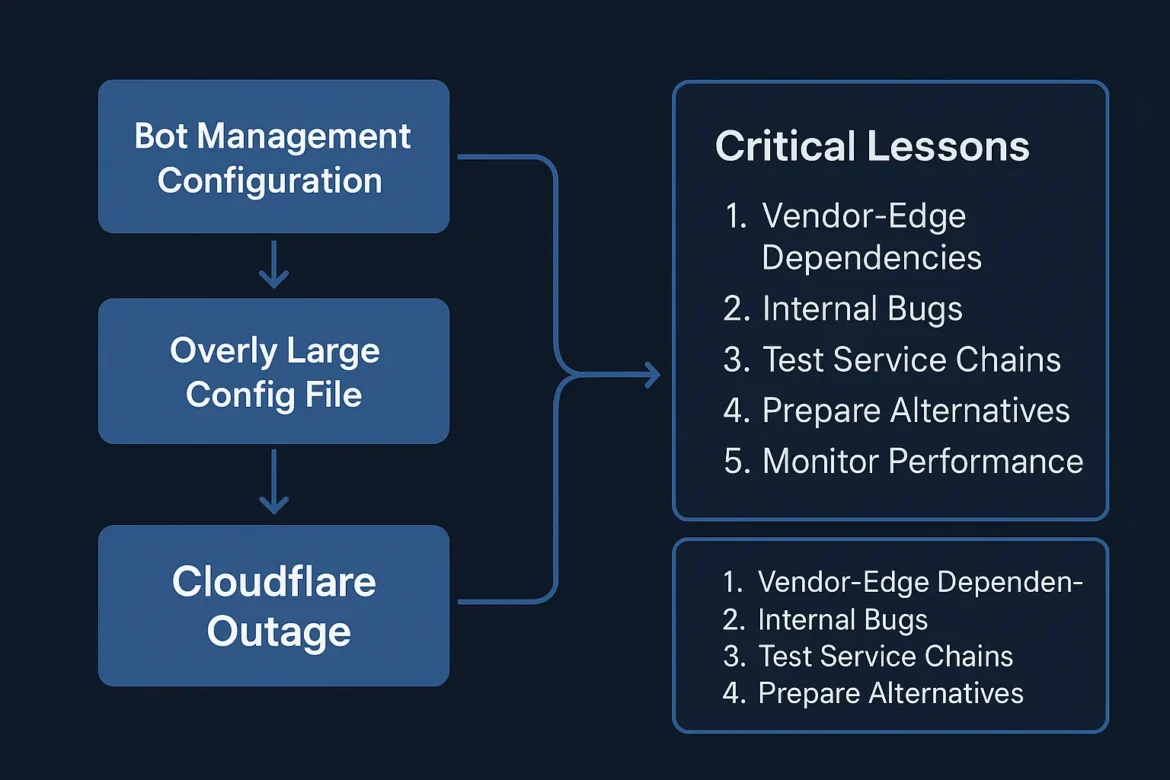

Unlike many large outages, this one was not triggered by a cyber-attack but by an internal failure in Cloudflare’s Bot Management infrastructure.

For security teams, this incident is a clear reminder: vendor infrastructure failures can be just as dangerous as attacks.

What Happened: The Bot Management Bug

Cloudflare confirmed that the outage was caused by a change to database permissions. The change led the logic that generates the Bot Management configuration file to produce a file roughly double its expected size, which exceeded a limit in the traffic-routing software.

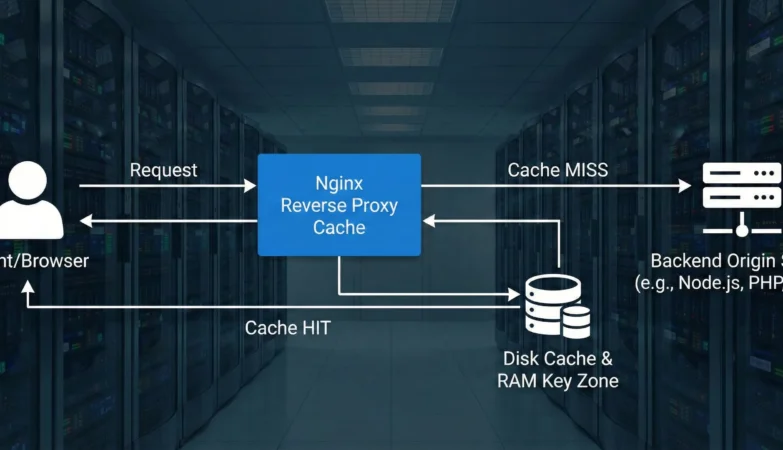

The Bot Management system uses this “feature file” to decide how to treat automated requests. When the software responsible for routing traffic across Cloudflare’s network tried to load the oversized file, it failed.

This cascading failure meant that many customers could not deliver traffic, resulting in widespread 5xx errors, service interruptions, and degraded performance.

Timeline & Impact

- ~11:20 UTC: Cloudflare begins seeing failures in traffic delivery.

- The configuration file issue propagates across data centres, causing core proxy software to crash.

- Multiple services go down: ChatGPT, X, major gaming platforms, transit systems, etc.

- ~14:30 UTC: Core traffic largely restored after replacing the faulty file.

- ~17:06 UTC: Services confirmed back to normal.

Impact Highlights:

- Tens of thousands of service-error reports globally.

- Vendor dependencies exposed: Many companies rely on Cloudflare’s CDN, DNS, WAF, Access, and Bot Management.

- Loss of service for many platforms during business hours.

Technical Root Cause Explained

✔ Bot Management Feature File

Cloudflare’s Bot Management system uses a continuously updated “feature file” that lists the features (individual traits of a request) its machine learning model uses to judge whether traffic is automated. A change to database permissions caused the query that builds this file to return duplicate entries, so the file roughly doubled in size.
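
As a rough illustration of a generator-side safeguard, the sketch below refuses to publish a feature file that contains duplicate entries. It is not Cloudflare’s code: the row shape (a dict with a `name` key) and both function names are assumptions made for this example.

```python
from collections import Counter


def find_duplicate_features(rows):
    """Return the feature names that appear more than once, sorted."""
    counts = Counter(row["name"] for row in rows)
    return sorted(name for name, n in counts.items() if n > 1)


def build_feature_file(rows):
    """Build the feature list, refusing to publish if duplicates are present."""
    duplicates = find_duplicate_features(rows)
    if duplicates:
        raise ValueError(f"duplicate features, refusing to publish: {duplicates}")
    return [row["name"] for row in rows]
```

A check like this runs where the file is produced, so a bad query result is stopped before it is distributed to any machine that consumes it.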

✔ File Size Limit Exceeded

The file grew beyond the limit the traffic-routing software was built to handle. When the proxy software attempted to load it, the load failed and the proxy crashed. Because the file was distributed to data centres across the network, the crashes spread with it.
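
A common defensive pattern for this failure mode is to validate a new configuration before applying it and to keep the last known-good version when validation fails. The sketch below shows the idea under stated assumptions: the `FeatureLoader` class is hypothetical, and `MAX_FEATURES` is an illustrative placeholder rather than a confirmed value from Cloudflare’s proxy.

```python
MAX_FEATURES = 200  # illustrative placeholder limit, not a confirmed value


class FeatureLoader:
    """Keeps the last known-good feature list when a new one is rejected."""

    def __init__(self, max_features=MAX_FEATURES):
        self.max_features = max_features
        self.active = []   # feature list currently in use
        self.rejected = 0  # number of rejected updates, useful for alerting

    def load(self, candidate):
        """Apply candidate if it fits the limit; otherwise keep the old list."""
        if len(candidate) > self.max_features:
            self.rejected += 1
            return False
        self.active = list(candidate)
        return True
```

The design choice here is to degrade rather than crash: an oversized update is counted and reported, while traffic continues to be served with the previous feature list.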

✔ Not a DDoS Attack

The outage was initially suspected to be a DDoS or bot-attack spike, but Cloudflare confirmed that the cause was internal, not malicious.

✔ Vendor Software Dependency

Because routing depends on the Bot Management module, a bug there caused cascading failures in unrelated network paths, showing how vendor dependencies can amplify impact.

Why This Matters for Security Teams

- ✅ Vendor risk is real: Even the largest infrastructure provider can fail because of an internal bug, not just an external attack.

- ✅ Edge services are high-value targets: If they fail, your applications might fail even if your code is secure.

- ✅ Bot mitigation systems can introduce fragility: Automated rule systems and giant config files are complex — errors can propagate silently.

- ✅ Service chain failures: DNS → CDN → WAF → Bot Management → Backend — a failure at any link can affect you.

- ✅ Incident readiness must cover “vendor failure” not just “threat actor”: Your fallback, redundancy, and monitoring must include vendor-layer faults.
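
To make the service-chain point concrete, the sketch below estimates the availability of a serial chain, where every link must be up at the same time. It assumes the links fail independently, which is a simplification, and the per-link figures are hypothetical numbers chosen for illustration, not measurements of any real provider.

```python
from math import prod


def chain_availability(availabilities):
    """Availability of a serial chain: the product of each link's availability."""
    return prod(availabilities)


# Hypothetical per-link availabilities for DNS -> CDN -> WAF -> Bot Management -> Backend.
chain = {
    "dns": 0.9999,
    "cdn": 0.9995,
    "waf": 0.9995,
    "bot_management": 0.9995,
    "backend": 0.999,
}

total = chain_availability(chain.values())
hours_down_per_year = (1 - total) * 24 * 365
print(f"end-to-end availability: {total:.4%}")
print(f"expected downtime: about {hours_down_per_year:.1f} hours per year")
```

The end-to-end figure is always lower than the weakest single link, which is why each additional upstream dependency deserves its own fallback plan.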

Five Key Lessons for DevSecOps

- Map your dependencies — List every service you depend on (CDN, DNS, WAF, Access, bot-mitigation) and evaluate their single-points-of-failure.

- Conduct “vendor outage drills” — Simulate the scenario where a core provider fails and test how your apps behave.

- Monitor vendor error rates & anomalies — Observe not just your own metrics but the status of upstream providers.

- Have fallback routes — Ensure alternate paths or providers exist (multi-CDN, multi-DNS, multi-WAF).

- Require vendor transparency & post-mortem action — Use vendor incident reports (like Cloudflare’s) as input for improvements in your architecture.
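
As one possible starting point for monitoring upstream error rates and deciding when to switch to a fallback route, the sketch below tracks the share of 5xx responses over a sliding window of recent requests. The class name, window size, and threshold are all assumptions for illustration and would need tuning against real traffic.

```python
from collections import deque


class ErrorRateMonitor:
    """Tracks the share of 5xx responses over the last `window` requests."""

    def __init__(self, window=100, threshold=0.2):
        self.samples = deque(maxlen=window)  # True for each 5xx response
        self.threshold = threshold

    def record(self, status_code):
        """Record one upstream response by its HTTP status code."""
        self.samples.append(500 <= status_code <= 599)

    def error_rate(self):
        """Fraction of recorded responses that were 5xx (0.0 if none recorded)."""
        if not self.samples:
            return 0.0
        return sum(self.samples) / len(self.samples)

    def should_fail_over(self):
        """True once the window is full and the 5xx share exceeds the threshold."""
        window_full = len(self.samples) == self.samples.maxlen
        return window_full and self.error_rate() > self.threshold
```

Requiring a full window before signalling failover avoids reacting to a handful of errors right after startup. In a vendor outage drill, the same monitor can be fed synthetic 5xx responses to confirm that the fallback path is actually triggered.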

What You Should Do Now

- Review your architecture: Which upstream vendors do you rely on?

- Check your SLAs and error-response plans: How would you respond if one failed?

- Update your incident playbook: Include vendor-failure scenarios, communications, escalation.

- Engage with your vendors: Ask about their internal change management (config file size limits, CBT, etc.).

- Monitor vendor status pages & error spikes: Early warnings can give you lead time.
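
Status-page monitoring can be automated with a small poller. The sketch below assumes the vendor’s status page serves a Statuspage-style JSON document containing a `status` object with an `indicator` field that reads `"none"` when everything is operational. That payload shape is an assumption to verify against your vendor’s actual status API, and the URL is left to the caller.

```python
import json
from urllib.request import urlopen


def is_degraded(payload):
    """Return True unless the payload explicitly reports indicator 'none'.

    A missing or unexpected indicator counts as degraded, so a changed
    payload format triggers an alert instead of being silently ignored.
    """
    indicator = payload.get("status", {}).get("indicator")
    return indicator != "none"


def fetch_status(url, timeout=5):
    """Fetch and decode the JSON status document at `url`."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

A scheduled job could call `is_degraded(fetch_status(url))` every minute or so and page the on-call engineer on a change, alongside your own error-rate metrics.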

People Also Ask

Q1. Why did the Cloudflare outage happen on Nov 18, 2025?

It was caused by an oversized Bot Management configuration file that exceeded a limit in the routing software and made it crash.

Q2. Did the Cloudflare outage result from a cyberattack?

No — Cloudflare confirms the cause was internal and not malicious.

Q3. Which services were affected by the outage?

Major platforms including ChatGPT, X, Canva, and many other websites relying on Cloudflare’s infrastructure.

FAQ Section

Q1: What does “Cloudflare outage explained” refer to?

It refers to the November 18, 2025 incident in which an internal configuration bug at Cloudflare disrupted major web services.

Q2: How can this affect my organization?

If your services rely on Cloudflare (or similar providers) for DNS, CDN, WAF or Bot Management, an outage like this can cause service disruption even if your application is secure.

Q3: What are the best preventive steps?

Map your dependencies, prepare fallback options, simulate vendor failure drills, and monitor for early vendor-problem signals.

Q4: Was a cyberattack responsible?

No, the outage was caused by an internal software and configuration failure, not a cyberattack.

Explore more deep-dive posts:

- https://hackervault.tech/owasp-top-10-2025-release-candidate/

- https://hackervault.tech/how-ransomware-works-from-infection-to-extortion/

- https://hackervault.tech/inside-malware-how-to-detect-dissect-defend-against-hidden-cyber-threats/

External Authoritative Link:

- Cloudflare’s official outage blog: https://blog.cloudflare.com/18-november-2025-outage/

Conclusion

The Cloudflare outage of November 2025 is a sharp reminder: it’s not just threats outside your organization that can bring you down — internal bugs and vendor configuration issues can too. Security teams must treat vendor infrastructure with the same vigilance applied to their own code.
